Published: September 7, 2025

Last updated: January 7, 2026

You open a recording because you need one thing: the decision, the quote, the moment someone said “Yes, we’ll do it.”

You even have a transcript. Great.

But five minutes later you’re still replaying the audio, because the text doesn’t tell you who’s speaking — and without that, the transcript turns into a blur.

That’s exactly where speaker recognition technology helps.

Instead of a transcript that reads like one long paragraph, you get a transcript that looks like a real conversation, with speaker labels and timestamps. This is what makes audio transcription actually usable — for quoting, summarizing, and finding the right moment without scrubbing through the whole file.

Speaker Diarization, Speaker Identification, Speaker Verification: What People Usually Mean

A big reason this topic is confusing is that “speaker recognition” can mean different things depending on the context and the kind of system you’re talking about.

- Speaker diarization answers: “Who spoke when?” It detects speaker changes, separates multiple speakers, and labels turns as Speaker 1, Speaker 2, etc. This is what helps with meetings, interviews, podcasts, webinars, training, and most “who said what” tasks.

- Speaker identification answers: “Which known person is speaking?” This is about speaker identity when you have known speakers (for example, an internal directory, an agent list in call centers, or a set of approved participants).

- Speaker verification answers: “Is this the same person as X?” This is common in security workflows and voice biometrics, where you want to verify identity (often as a yes/no or a confidence score).
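To make the difference between the last two tasks concrete, here is a minimal Python sketch. It assumes each voice has already been turned into a numeric vector (an embedding); the enrolled names, the three-dimensional vectors, and the 0.8 threshold are all made up for illustration and do not come from any particular product. Diarization is left out because it needs no enrolled speakers at all.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical enrolled voiceprints (real embeddings have many more dimensions).
ENROLLED = {
    "alice": [0.9, 0.1, 0.2],
    "bob": [0.1, 0.8, 0.3],
}

def identify(embedding, enrolled=ENROLLED):
    """Identification: which known speaker is the closest match?"""
    return max(enrolled, key=lambda name: cosine_similarity(embedding, enrolled[name]))

def verify(embedding, claimed_name, enrolled=ENROLLED, threshold=0.8):
    """Verification: is this the claimed person? Returns (decision, score)."""
    score = cosine_similarity(embedding, enrolled[claimed_name])
    return score >= threshold, score

sample = [0.85, 0.15, 0.25]                   # embedding of an incoming voice sample
who = identify(sample)                        # picks one of the enrolled names
is_alice, alice_score = verify(sample, "alice")
is_bob, bob_score = verify(sample, "bob")
```

Identification always returns one of the enrolled names, while verification returns a yes/no decision plus a score for a single claimed identity. Both depend on enrollment data that plain diarization does not need.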

In this guide, we focus mainly on speaker diarization, because it’s the most practical “day-to-day” use of speaker recognition technology for content teams.

Why Transcripts Without Speaker Labels Still Slow Teams Down

A raw transcript tells you what was said, but it doesn’t help you understand the conversation quickly.

If you’ve ever tried to extract quotes from an interview, write meeting notes, or reuse a webinar, you know the pain: you still replay sections just to figure out who asked the question, who answered, and who committed to the next step. Most of the time goes into interpreting the conversation, not reading it.

Speaker labeling removes that “untangling tax,” especially when you handle large volumes of recordings and audio files across teams.

How Much Time Speaker Recognition Can Save (At a Glance)

| Audience / Use case | Typical task | Manual baseline (per 1 hr audio) | With AI transcript + speaker recognition | Time saved (typical) | Notes |
|---|---|---|---|---|---|
| Journalists & UX researchers | Interviews, focus groups; pull quotes, Q/A mapping | ~4–6 hrs to hand-transcribe; more for noisy audio | Draft in minutes; ~2 hrs to post-edit a 60-min file when ASR is high quality | ~50–70% vs. manual (often higher for long sessions) | Speaker labels cut re-listening to figure out “who said what.” |
| Podcasters & video editors | Logging, paper edits, splitting host/guest dialogue | ~2 hrs to log a 60-min episode | ~1 hr with transcripts that include speaker tags + timestamps | ~50% less logging time | Speaker tags make pulls and edits faster; fewer scrubs in the timeline. |
| Business teams & PMs | Meeting notes, action items, ownership tracking | 5–8 hrs/week writing minutes & follow-ups (heavy meeting load) | AI minutes with speaker IDs; summaries in minutes | ~70–90% on minutes; ~3.5–7+ hrs/week back | Users report 4–10+ hrs saved per week with AI note-taking. |
| Educators & legal settings | Lectures, hearings, depositions; precise quoting | ~4–6 hrs per audio hour (or more) to transcribe + structure | Diarized transcripts speed up indexing, citations, and summaries | Hours saved per session (varies by quality and formality) | Speaker labels reduce verification time for who asked/answered. |
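The percentages in the table follow from simple arithmetic on its own hour figures. The short Python sketch below reproduces them; the helper name `percent_saved` is made up for illustration, and the inputs are the table’s rough estimates rather than measurements.

```python
def percent_saved(baseline_hours, new_hours):
    """Share of the manual baseline that is no longer needed, in whole percent."""
    return round(100 * (baseline_hours - new_hours) / baseline_hours)

# Interviews: 4-6 hrs by hand vs. about 2 hrs of post-editing.
interview_low = percent_saved(4, 2)    # 50
interview_high = percent_saved(6, 2)   # 67

# Podcast logging: about 2 hrs by hand vs. about 1 hr with speaker tags.
podcast = percent_saved(2, 1)          # 50

# Meeting minutes: 5-8 hrs/week, of which roughly 70-90% is saved.
hours_back_low = 5 * 70 / 100          # 3.5
hours_back_high = 8 * 90 / 100         # 7.2
```

Your own numbers will differ with audio quality and how much editing you need, so treat these as a way to estimate rather than a guarantee.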

How Speaker Diarization Works

Under the hood, speaker diarization is a speech processing task. It takes an audio signal, analyzes acoustic features, and tries to decide which segments belong to the same speaker vs different speakers.

A typical pipeline looks like this:

- First, the system finds where speech happens (as opposed to silence).

- Then it splits the speech into small segments and extracts features from each one. Modern speaker recognition models often convert each segment into a compact representation called a speaker embedding: a vector that captures characteristics of a speaker’s voice, such as pitch-related patterns.

- Next, the system compares segments to group them. One common approach is to measure the similarity between embeddings (for example, with cosine similarity), cluster the segments that likely belong to the same speaker, and separate them from other speakers. Older approaches sometimes relied on Gaussian mixture models, while many current systems use deep neural networks and large-scale training data to improve system performance.

- Finally, the transcript is labeled with timestamps: Speaker 1 / Speaker 2 / Speaker 3.

In short: diarization is segmentation → representation → grouping → labeling.
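The grouping and labeling steps can be sketched in a few lines of Python. This is a toy illustration, not how any specific product works: it assumes speech detection and embedding extraction have already happened, uses made-up three-dimensional embeddings, and swaps in a simple greedy clustering with an arbitrary 0.9 similarity threshold where real systems use more robust methods.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def diarize(segments, threshold=0.9):
    """Greedy clustering: attach each segment to the most similar existing
    speaker, or open a new speaker if nothing is similar enough."""
    centroids = []  # one running-mean embedding per speaker
    counts = []     # number of segments assigned to each speaker so far
    turns = []
    for start, end, emb in segments:
        scores = [cosine_similarity(emb, c) for c in centroids]
        best = max(range(len(scores)), key=scores.__getitem__, default=None)
        if best is None or scores[best] < threshold:
            centroids.append(list(emb))
            counts.append(1)
            best = len(centroids) - 1
        else:
            n = counts[best]
            centroids[best] = [(c * n + e) / (n + 1) for c, e in zip(centroids[best], emb)]
            counts[best] = n + 1
        turns.append((start, end, f"Speaker {best + 1}"))
    return turns

# Hypothetical (start_sec, end_sec, embedding) tuples for five speech segments.
segments = [
    (0.0, 4.5, [0.90, 0.10, 0.10]),
    (4.5, 7.0, [0.10, 0.90, 0.20]),
    (7.0, 10.5, [0.88, 0.12, 0.08]),
    (10.5, 12.0, [0.10, 0.20, 0.95]),
    (12.0, 15.0, [0.12, 0.85, 0.25]),
]
turns = diarize(segments)
labels = [speaker for _, _, speaker in turns]
# labels: Speaker 1, Speaker 2, Speaker 1, Speaker 3, Speaker 2
```

Note that the labels are only consistent within one recording: “Speaker 1” here is whoever spoke first, not a known person.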

Where Speaker Recognition is Used

Speaker recognition systems show up in very different places.

In call centers, speaker identification can help tag who is speaking (agent vs customer), support QA workflows, or power person-based analytics across real-time applications.

In security contexts, speaker verification and voice biometrics can be used to verify identity (with strict policies and privacy requirements). In some domains — including criminal investigations — voice data may be used as part of broader investigative work (with careful legal and ethical constraints).

But for most business teams creating and managing content, diarization is the workhorse: it helps you turn recordings into something searchable and reusable.

What Speaker Labels Unlock in Day-to-Day Work

Speaker labels change how quickly you can go from “we recorded it” to “we can use it.”

For customer interviews and research, diarization makes it easier to pull quotes and build summaries without replaying everything. You spend less time guessing who spoke, and more time actually processing insights.

For podcasts and webinars, it becomes much easier to locate key moments and create clips. When the transcript is speaker-aware, editing decisions are faster because the structure is visible at a glance.

For internal meetings, the value is often underrated: it’s not only about readability, it’s about accountability. When a transcript clearly separates speakers, it’s easier to track decisions, follow-ups, and ownership without relying on memory.

Accuracy: What Affects Results

Speaker diarization can be very good, but it’s limited by the recording and the environment.

It works best when audio is clean, speakers take turns, and microphones are close. It gets harder in noisy environments, echoey rooms, or when people interrupt each other — overlapping speech is still one of the biggest challenges in speaker diarization.

More speakers typically means more complexity. With many participants and rapid turn-taking, a system may split one person into multiple labels or merge two speakers into one. This is a normal tradeoff for real-world audio.
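One way to see where splitting and merging come from is the similarity threshold used when grouping segments. The Python sketch below is a deliberately simplified illustration with made-up two-dimensional embeddings for two real speakers; actual systems use different clustering methods, but the tradeoff is similar in spirit.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def count_speakers(embeddings, threshold):
    """Count the labels a greedy grouping would produce: a segment starts a
    new speaker only if it is not similar enough to any earlier speaker."""
    representatives = []
    for emb in embeddings:
        if not any(cosine_similarity(emb, rep) >= threshold for rep in representatives):
            representatives.append(emb)
    return len(representatives)

# Two real speakers, two segments each, with natural variation in each voice.
embeddings = [
    [1.00, 0.20],  # speaker A
    [0.60, 0.80],  # speaker B
    [0.95, 0.30],  # speaker A again
    [0.50, 0.90],  # speaker B again
]

balanced = count_speakers(embeddings, threshold=0.9)      # 2 labels
too_strict = count_speakers(embeddings, threshold=0.999)  # 4 labels: each person is split
too_loose = count_speakers(embeddings, threshold=0.6)     # 1 label: both people are merged
```

Noise, echo, and overlapping speech make embeddings from the same person look less alike, which pushes a system toward exactly these two failure modes.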

If you need strict identity decisions (“Is this John?”), you’re no longer in diarization territory — you’re in speaker identification/verification, often with additional requirements (known speakers, enrollment samples, and higher security standards).

A Practical Way to Improve Accuracy

The fastest way to improve accuracy is to improve input quality. Small changes make a big difference:

- Use headsets when possible.

- Avoid a single speakerphone in a large room.

- Encourage people not to talk over each other.

- Reduce echo and background noise.

- For interviews, separate microphones or separate tracks can dramatically help.

If you’re operating in multilingual teams, look for multilingual support — language and accent variation can impact system performance, and some systems handle cross-language conditions better than others.

If your product supports configuration, you can sometimes fine-tune speaker recognition models (or adjust settings) using task-specific training data or curated samples, but you don’t need that to get value in everyday workflows.

Example: Transcript Before vs After Speaker Labels

Here’s what “usable” looks like.

Before:

00:00 We should launch the campaign next week.

00:04 I’m worried the landing page isn’t ready.

00:07 We can ship the first version and iterate.

00:10 What about legal review?

00:12 It’s scheduled for Friday.

After:

Speaker 1 (00:00–00:05): We should launch the campaign next week.

Speaker 2 (00:04–00:08): I’m worried the landing page isn’t ready.

Speaker 1 (00:07–00:11): We can ship the first version and iterate.

Speaker 3 (00:10–00:13): What about legal review?

Speaker 2 (00:12–00:15): It’s scheduled for Friday.

Same words. Totally different usability. With speaker labels, you can scan, quote, attribute, and find the moment in seconds — even when there’s an unknown speaker or a new participant you didn’t expect.
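As a rough sketch of how the “After” view can be produced, the Python below combines timestamped transcript lines with diarization turns. The data structures and function names are assumptions for illustration. It also assumes the turns are sorted by start time and that every line starts at or after the first turn.

```python
def mmss(seconds):
    """Format whole seconds as MM:SS."""
    return f"{seconds // 60:02d}:{seconds % 60:02d}"

def label_transcript(lines, turns):
    """lines: (start_sec, text); turns: (start_sec, end_sec, speaker), sorted by start."""
    labeled = []
    for start, text in lines:
        # Pick the latest turn that began at or before this line's start time.
        candidates = [t for t in turns if t[0] <= start]
        turn_start, turn_end, speaker = candidates[-1]
        labeled.append(f"{speaker} ({mmss(turn_start)}–{mmss(turn_end)}): {text}")
    return labeled

lines = [
    (0, "We should launch the campaign next week."),
    (4, "I’m worried the landing page isn’t ready."),
    (7, "We can ship the first version and iterate."),
    (10, "What about legal review?"),
    (12, "It’s scheduled for Friday."),
]
turns = [
    (0, 5, "Speaker 1"),
    (4, 8, "Speaker 2"),
    (7, 11, "Speaker 1"),
    (10, 13, "Speaker 3"),
    (12, 15, "Speaker 2"),
]
labeled = label_transcript(lines, turns)
```

Printing `labeled` line by line gives the same speaker-labeled layout as the “After” example.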

Why This Matters Inside a DAM

A transcript is helpful, but a transcript with speaker labels is reusable.

When speaker-labeled transcripts live next to your recordings inside a DAM, your library stops being a pile of “things we recorded” and starts acting like searchable knowledge. You can access the right moment faster, reuse quotes without guesswork, and share recordings with context instead of sending a 45-minute file and hoping someone finds the good part.

This is where “AI features” become real workflow improvements: speed, clarity, and less time wasted searching.

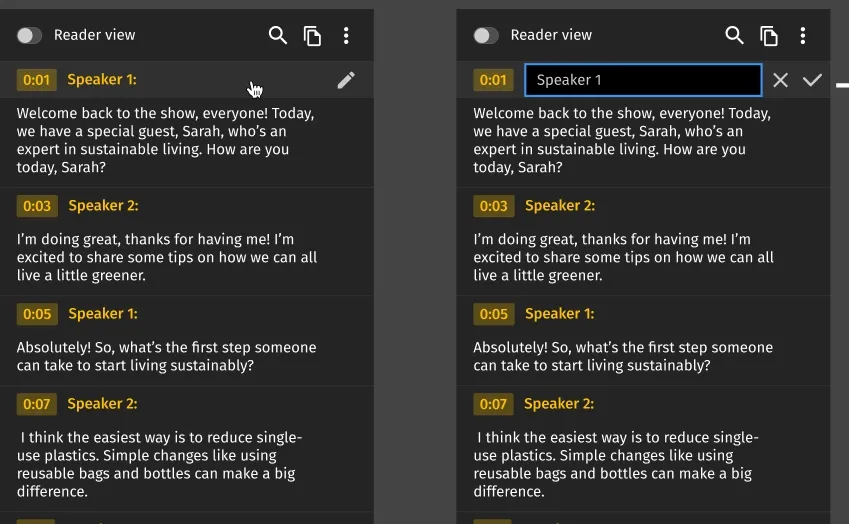

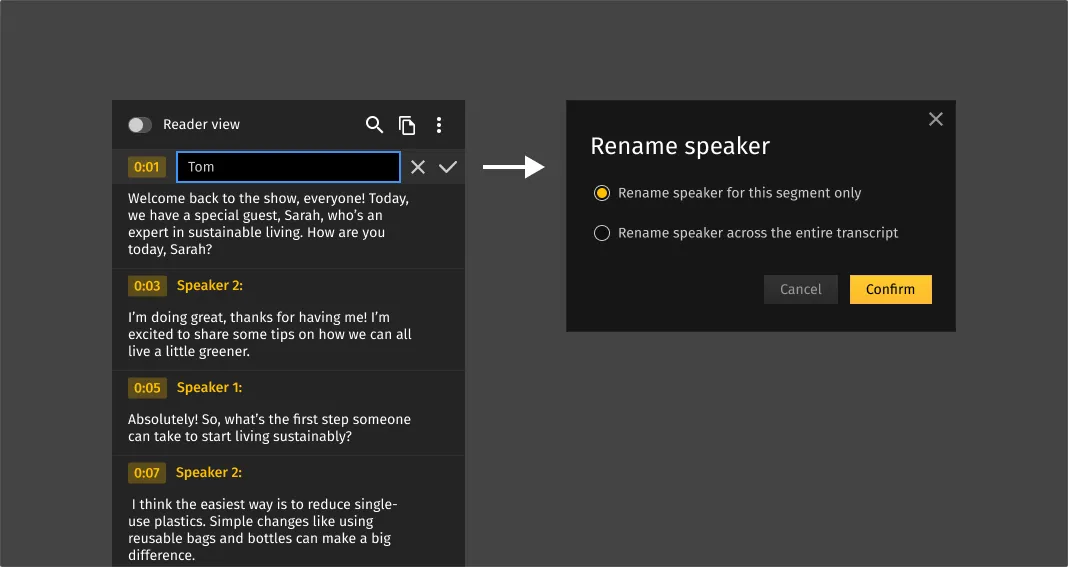

A typical workflow in Pics.io

Most teams start simple. They store recordings in the library where they already keep assets, run transcription/analysis, review the transcript during playback, and search the text to jump to key moments. From there, the content becomes easy to reuse: copy a quote into a doc, pull the timestamp for a highlight, or share the asset knowing the transcript is readable and structured.

You can rename speakers and quickly find assets where they appear — right inside your DAM.
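Conceptually, renaming and searching are simple operations on speaker-labeled segments. The Python sketch below illustrates the idea only: it is not the Pics.io API, and the segment structure, function names, and the names “Dana” and “Lee” are all hypothetical.

```python
def rename_speakers(segments, names):
    """Return new segments with generic labels replaced by real names where known."""
    return [{**s, "speaker": names.get(s["speaker"], s["speaker"])} for s in segments]

def search(segments, query, speaker=None):
    """Find segments containing the query text, optionally for one speaker only."""
    q = query.lower()
    return [
        s for s in segments
        if q in s["text"].lower() and (speaker is None or s["speaker"] == speaker)
    ]

segments = [
    {"speaker": "Speaker 1", "start": 0, "text": "We should launch the campaign next week."},
    {"speaker": "Speaker 2", "start": 4, "text": "I'm worried the landing page isn't ready."},
    {"speaker": "Speaker 3", "start": 10, "text": "What about legal review?"},
    {"speaker": "Speaker 2", "start": 12, "text": "It's scheduled for Friday."},
]

renamed = rename_speakers(segments, {"Speaker 1": "Dana", "Speaker 2": "Lee"})
legal_hits = search(renamed, "legal")          # every mention of "legal"
lee_hits = search(renamed, "", speaker="Lee")  # everything Lee said
jump_to = legal_hits[0]["start"]               # timestamp (in seconds) to jump to
```

Speakers without a known name keep their generic label, so an unexpected participant still shows up as “Speaker 3” in search results.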

If you’re dealing with many files across departments and languages, this approach scales well — it’s designed for handling large volumes of audio and video content without turning your process into chaos.

FAQ

Is speaker diarization the same as speaker recognition?

Not exactly. “Speaker recognition” is often used broadly. Speaker diarization labels who spoke when. Speaker identification and speaker verification focus on identity.

Is this text-dependent or text-independent?

Most diarization and many modern speaker recognition systems are text-independent: they don’t require a specific phrase. Text-dependent systems are more common in strict verification scenarios where a person must say a specific passphrase.

Can it label real names automatically?

Diarization typically outputs Speaker 1/2/3. Assigning real names is a speaker identification problem and usually requires known speakers and enrollment voice data.

What’s the biggest reason diarization struggles?

Overlapping speech, plus echo and far-away microphones.

Is this voice biometrics?

No. Diarization groups segments to label a conversation. Voice biometrics is identity-focused verification/identification and usually has stricter data, privacy, and security requirements.

Key Takeaways

Speaker diarization answers a simple question — “who spoke when” — but the impact is big. It makes audio transcription readable, searchable, and reusable. It also makes recordings far more useful inside a DAM, where speed and access matter every day.

If your team already uses transcripts but still wastes time replaying recordings, speaker labels are usually the missing piece.

Did you enjoy this article? Give Pics.io a try — or book a demo with us, and we'll be happy to answer any of your questions.

Author

John Shpika is a software engineer, entrepreneur, and the founder of Pics.io. He’s been building software since 1999 and launching products and companies since 2012, with experience across enterprise architecture, product development, and operations. He’s also the author of What is Digital Asset Management?: Discover the best practices for your business (Kindle).